Senior Technical Consultant @ Fincons

I design and develop cross-platform applications for mobile, web, desktop, and TV, focusing on architecture, performance, and CI/CD pipelines.

I work with technologies like Kotlin Multiplatform, React Native, Xamarin, and Android native, using MVVM, Redux, and reactive programming patterns.

Teacher @ U3 Carate Brianza

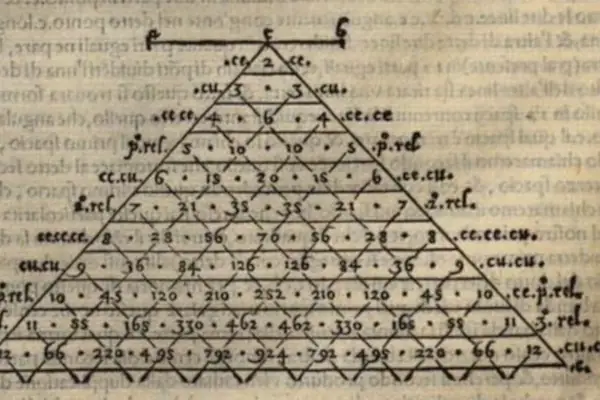

I teach technology-focused courses for a local educational initiative aimed at making complex topics accessible and engaging.

The curriculum blends computing history with emerging technologies, covering topics such as computer hardware, the future of quantum computing, and artificial intelligence.

Student @ UniMi

I'm currently pursuing a second bachelor's degree in History at the University of Milan, where I previously earned both my BSc and MSc in Computer Science with top marks (110 cum laude).

I'm studying history out of personal interest, driven by curiosity about how past societies shaped the world we live in today.